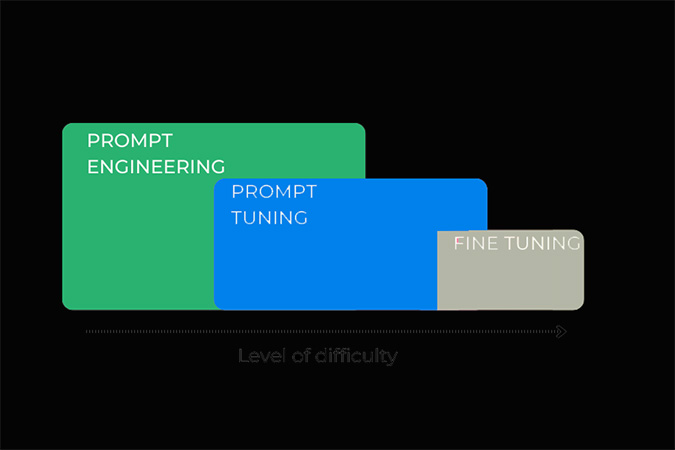

In the world of natural language processing (NLP), constant efforts are made to improve the efficiency and performance of language models. With the introduction of Parameter-Efficient Fine-Tuning (PEFT), researchers sought an efficient way to adapt a pre-trained language model by updating only a small number of parameters instead of retraining all of them. One PEFT method that has gained significant attention is “Prompt Tuning.” In this article, we will explore fine-tuning, prompt tuning, and prompt engineering, compare their benefits, and look at their potential impact on language models.

Prompt Tuning is one of the techniques in Parameter-Efficient Fine-Tuning (PEFT). To learn more about PEFT, click here.


Introduction:

In the ever-evolving landscape of artificial intelligence, training models to generate human-like text has become a focal point of research and development. Among the many techniques employed, fine-tuning, prompt tuning, and prompt engineering have emerged as prominent methods. In this article, we delve into these strategies, exploring their nuances, advantages, and applications.

What is Fine-Tuning?

Fine-Tuning is a technique used to adapt a pre-trained language model to perform specific tasks or follow specific guidelines. It involves taking a pre-existing model, such as GPT-3, and continuing to train it on a smaller, narrower dataset, which updates the model’s weights. This dataset is typically domain-specific and tailored to the desired application.
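
To make the idea concrete, here is a deliberately tiny sketch in plain Python; it is not how GPT-3 or any real model is fine-tuned. It assumes a hypothetical “pre-trained” linear model with just two parameters and a made-up domain dataset. The point it illustrates is that fine-tuning starts from existing weights and updates all of them on the new data.

```python
def predict(params, x):
    """A hypothetical 'pre-trained' model: y = w * x + b."""
    w, b = params
    return w * x + b


def fine_tune(params, data, lr=0.05, epochs=500):
    """Full fine-tuning: gradient descent on mean squared error, updating every parameter."""
    w, b = params
    n = len(data)
    for _ in range(epochs):
        # Prediction errors on the domain data with the current weights.
        errors = [(predict((w, b), x) - y, x) for x, y in data]
        # Gradients of the mean squared error with respect to both parameters.
        grad_w = sum(2 * err * x for err, x in errors) / n
        grad_b = sum(2 * err for err, _ in errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


# Hypothetical starting weights and a tiny domain dataset that follows y = 3x + 1.
pretrained = (1.0, 0.0)
domain_data = [(0.0, 1.0), (1.0, 4.0), (2.0, 7.0), (3.0, 10.0)]

tuned = fine_tune(pretrained, domain_data)
print("pre-trained:", pretrained)
print("fine-tuned: ", tuple(round(p, 3) for p in tuned))
```

Real fine-tuning does the same thing at a vastly larger scale, which is where the data and compute costs listed below come from.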

Pros of Fine-Tuning

- Domain-specific expertise: Fine-tuning allows for the injection of domain-specific knowledge into a model, making it excel in particular tasks.

- Task versatility: Fine-tuning can be applied to a wide range of tasks, from chatbots to content generation, making it a versatile tool.

Cons of Fine-Tuning

- Data requirements: It necessitates a substantial amount of high-quality training data, which may not always be readily available.

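- Overfitting risk: Fine-tuning too aggressively, especially on a small dataset, can lead to overfitting, where the model memorizes the training examples and generalizes poorly to new inputs. It can also erode the model’s general abilities on unrelated tasks, a problem known as catastrophic forgetting.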

The Power of Prompt Tuning

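Prompt Tuning is a method that steers a language model by optimizing its input rather than the model itself. A small set of trainable vectors, often called a “soft prompt,” is prepended to the embeddings of every input, and only these vectors are updated during training while the model’s own weights stay frozen. Unlike a hand-written prompt, a soft prompt is a set of numbers rather than words.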
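
The following toy sketch, in plain Python, illustrates the soft-prompt idea under simplifying assumptions: the frozen “model” is a hypothetical linear scorer over mean-pooled embeddings, and the embedding table, weights, and training data are invented. Only the prompt vectors receive gradient updates; the embeddings and model weights are never modified.

```python
import copy

# Frozen parts (hypothetical): a tiny embedding table and a linear scorer.
EMBEDDINGS = {
    "good": [1.0, 0.5],
    "bad": [-1.0, 0.2],
    "movie": [0.1, 0.1],
    "plot": [0.0, 0.3],
}
MODEL_W = [2.0, -1.0]


def forward(prompt, tokens):
    """Prepend soft-prompt vectors to the token embeddings, mean-pool, and score."""
    seq = prompt + [EMBEDDINGS[t] for t in tokens]
    pooled = [sum(vec[i] for vec in seq) / len(seq) for i in range(len(MODEL_W))]
    score = sum(w * p for w, p in zip(MODEL_W, pooled))
    return score, len(seq)


def loss(prompt, data):
    """Mean squared error of the frozen model's scores, given a soft prompt."""
    return sum((forward(prompt, toks)[0] - y) ** 2 for toks, y in data) / len(data)


def prompt_tune(prompt, data, lr=0.1, steps=300):
    """Gradient descent on the prompt vectors only; EMBEDDINGS and MODEL_W stay fixed."""
    prompt = copy.deepcopy(prompt)  # leave the caller's initial prompt untouched
    for _ in range(steps):
        grads = [[0.0] * len(MODEL_W) for _ in prompt]
        for toks, y in data:
            score, n = forward(prompt, toks)
            # d(score)/d(prompt[j][i]) = MODEL_W[i] / n for every prompt vector j.
            for j in range(len(prompt)):
                for i, w in enumerate(MODEL_W):
                    grads[j][i] += 2 * (score - y) * w / n / len(data)
        for j, vec in enumerate(prompt):
            for i in range(len(vec)):
                vec[i] -= lr * grads[j][i]
    return prompt


soft_prompt = [[0.0, 0.0], [0.0, 0.0]]  # two prompt "tokens", embedding size 2
train_data = [(["good", "movie"], 1.5), (["bad", "plot"], 0.5)]

tuned_prompt = prompt_tune(soft_prompt, train_data)
print("loss before:", round(loss(soft_prompt, train_data), 4))
print("loss after: ", round(loss(tuned_prompt, train_data), 4))
```

In this toy the prompt can only shift the scores; in a real transformer the prompt vectors interact with the input through attention, and a deep learning framework computes the gradients. The division of labor is the same, though: gradients flow through the frozen model into the prompt vectors only.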

Pros of Prompt Tuning

- Efficiency: Prompt tuning is efficient in terms of both time and resources, as only the small soft prompt is trained while the model’s weights are left unchanged.

- Control: Because the underlying model stays the same, you can train separate prompts for different tasks or styles and swap them in as needed.
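
A quick calculation shows why this is cheap. The sizes below are hypothetical (20 prompt tokens, 4,096-dimensional embeddings, a 7-billion-parameter model). A soft prompt has one vector per prompt token, so its parameter count is simply the number of prompt tokens times the embedding size.

```python
def soft_prompt_size(num_prompt_tokens, embedding_dim, total_model_params):
    """Return the trainable parameter count of a soft prompt and its share of the model."""
    prompt_params = num_prompt_tokens * embedding_dim  # one vector per prompt token
    return prompt_params, prompt_params / total_model_params


# Hypothetical sizes chosen for illustration.
params, share = soft_prompt_size(20, 4096, 7_000_000_000)
print(f"soft prompt: {params:,} parameters ({share:.5%} of the model)")
print(f"full fine-tuning: {7_000_000_000:,} parameters (100% of the model)")
```

With these numbers the soft prompt has 81,920 trainable parameters, roughly 0.001% of the model, whereas full fine-tuning would update all 7 billion.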

Cons of Prompt Tuning

- Limited adaptability: Prompt tuning may not be as effective for entirely novel tasks or domains where pre-existing prompts might not suffice.

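- Complexity: Prompt tuning still requires a training setup, access to the model’s embeddings, and machine learning expertise. The learned prompts are also not human-readable, which makes them harder to inspect than hand-written prompts.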

What is Prompt Engineering?

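Prompt Engineering is the practice of designing the text prompts (instructions, examples, and context) given to a language model to obtain the desired output. On its own it does not change the model’s weights, but it is often combined with fine-tuning or prompt tuning, for example by crafting specialized prompt templates and then fine-tuning the model on data formatted with them.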
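
As an illustration of prompt engineering on its own, the sketch below assembles an instruction, a couple of worked examples (a “few-shot” prompt), and a new input into a single string. The sentiment task, wording, and function name are hypothetical; the resulting text would be sent to a model as-is, and no weights are touched.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Combine an instruction, worked examples, and the new input into one prompt string."""
    lines = [instruction, ""]
    for text, label in examples:
        # Each example shows the model the exact input/output format we expect.
        lines.append(f"Review: {text}\nSentiment: {label}\n")
    # The final entry leaves the label blank for the model to complete.
    lines.append(f"Review: {query}\nSentiment:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as Positive or Negative.",
    [("I loved every minute.", "Positive"), ("The plot made no sense.", "Negative")],
    "The acting was superb.",
)
print(prompt)
```

Changing the instruction or the examples changes the model’s behavior immediately, which is what makes this approach fast to iterate on.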

Pros of Prompt Engineering

- Flexibility: Prompts can be revised instantly, and prompt engineering can be layered on top of a base model, a fine-tuned model, or a prompt-tuned one.

- Optimization: Prompt engineering allows for continuous optimization by refining prompts and fine-tuning as needed.

Cons of Prompt Engineering

- Resource use: When combined with fine-tuning, prompt engineering inherits its data and computation demands; even on its own, testing prompts across many cases costs time and inference calls.

- Complexity: Managing and optimizing prompts, as well as the fine-tuning process, can be complex and time-consuming.

Comparison Table of Fine-Tuning vs. Prompt Tuning vs. Prompt Engineering:

| Aspect | Fine-Tuning | Prompt Tuning | Prompt Engineering |
|---|---|---|---|
| Method | Model retraining | Training soft prompts (model frozen) | Crafting text prompts, often paired with tuning |
| Efficiency | Resource-intensive | Efficient in time/resources | Moderate resource use |
| Data Requirement | High-quality domain data | Small labeled task dataset | Instructions and a few examples; more if paired with fine-tuning |
| Control Over Output | Limited | High control | Moderate control |
| Adaptability | Domain-specific | Task-specific | Balanced versatility |
| Complexity | Moderate | Low | Moderate |
| Versatility | Limited | Limited | High |

Choosing the Right Approach

So, the question arises: which approach should you choose for your specific needs? The answer depends on various factors, including your project goals, available resources, and expertise.

- Fine-Tuning is ideal when you have ample domain-specific data and want a model to excel in a particular field.

- Prompt Tuning is efficient when you require quick and controlled responses without extensive model training.

- Prompt Engineering strikes a balance between versatility and precision, making it suitable for a wide range of applications.

Real-World Applications for Fine-Tuning vs. Prompt Tuning vs. Prompt Engineering

Now, let’s explore some real-world applications of these techniques to highlight their practical significance:

Content Generation

- Fine-Tuning: It can be used to train models for generating high-quality content in specific niches, such as healthcare or finance.

- Prompt Tuning: For generating content on-the-fly, like chatbot responses or creative writing, prompt tuning can be highly effective.

- Prompt Engineering: This approach is ideal when you need both quality and adaptability in content generation, such as in e-commerce product descriptions.

Language Translation

- Fine-Tuning: To achieve state-of-the-art translation models for specific language pairs, fine-tuning can be invaluable.

- Prompt Tuning: When you need a model to handle a new translation task without retraining all of its weights, training a small translation prompt for a frozen model is a lightweight option.

- Prompt Engineering: For versatile translation services that can handle various language pairs and dialects, prompt engineering shines.

Examples of Real-World Applications

Fine-Tuning in Healthcare:

In the healthcare sector, fine-tuning AI models for diagnosing diseases based on medical images has shown promising results. The precision achieved through fine-tuning aids in accurate diagnosis and treatment recommendations.

Prompt Tuning in Creative Writing:

Prompt tuning can adapt a frozen model to a particular voice or genre by training a soft prompt on examples of that style. Authors and content creators can then use the adapted model to overcome writer’s block and generate new ideas in that style.

Prompt Engineering for Customer Support:

Many companies employ prompt engineering to enhance their customer support chatbots. By structuring the conversation and defining acceptable responses, businesses ensure a consistent and helpful customer experience.
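
One way such structure might look in code is sketched below. It assumes a role-tagged message list (a format many chat model APIs use, though exact field names vary by provider) and a hypothetical tagging convention: the system prompt asks the model to begin every reply with an allowed tag, and a simple check replaces any non-conforming reply with a hand-off message. No real model is called here.

```python
# Hypothetical tags that define the acceptable kinds of responses.
ALLOWED_TAGS = ("[ORDER_STATUS]", "[REFUND]", "[HANDOFF]")

SYSTEM_PROMPT = (
    "You are a polite support assistant for an online store. "
    "Start every reply with exactly one of these tags: "
    + ", ".join(ALLOWED_TAGS)
    + ". If the request is outside these topics, use [HANDOFF]."
)


def build_messages(history, user_text):
    """Return role-tagged messages: system rules first, then prior turns, then the new user turn."""
    return [{"role": "system", "content": SYSTEM_PROMPT}, *history, {"role": "user", "content": user_text}]


def enforce_policy(reply):
    """Accept a model reply only if it starts with an allowed tag; otherwise hand off to a human."""
    if reply.startswith(ALLOWED_TAGS):
        return reply
    return "[HANDOFF] Let me connect you with a member of our support team."


messages = build_messages([], "Where is my order #123?")
print(messages[0]["role"])
print(enforce_policy("[ORDER_STATUS] Your order shipped yesterday."))
print(enforce_policy("Sure, here is a poem about cats!"))
```

The prompt defines the expected behavior, and the check enforces it, so an off-topic reply never reaches the customer.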

Conclusion

Fine-tuning vs. prompt tuning vs. prompt engineering each have their unique strengths and applications in the world of AI. Fine-tuning offers precision, prompt tuning provides flexibility, and prompt engineering gives control. The choice of method depends on the specific requirements of the task at hand.

To sum it up, understanding these techniques and applying them wisely can give your AI-powered content generation a real edge. Explore the possibilities, fine-tune your strategies, and engineer the perfect prompts to propel your online presence to new heights.

FAQs:

Q. What is fine-tuning in AI?

Fine-tuning in AI involves taking a pre-trained model and adapting it to perform a specific task with greater accuracy. It refines the model’s capabilities for a particular application.

Q. What are three types of prompt engineering?

Three commonly described types of prompt engineering are:

- Template-Based Prompt Engineering: Involves predefined templates for structured prompts.

- Constraint-Based Prompt Engineering: Imposes limitations on AI model outputs.

- Contextual Prompt Engineering: Supplies relevant background in the prompt so the model can better understand the request.
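
The three types can be combined in one prompt. In this hypothetical sketch, Python’s standard `string.Template` fills a fixed structure (template-based), one helper appends explicit rules (constraint-based), and another prepends background facts (contextual). The ticket text, rules, and helper names are made up for illustration.

```python
from string import Template

# Template-based: a fixed structure with named slots.
SUMMARY_TEMPLATE = Template("Summarize the following $doc_type in $n sentences:\n$text")


def template_prompt(doc_type, n, text):
    """Fill the template's slots to produce the core request."""
    return SUMMARY_TEMPLATE.substitute(doc_type=doc_type, n=n, text=text)


def add_constraints(prompt, constraints):
    """Constraint-based: append explicit limits the output must respect."""
    rules = "\n".join(f"- {c}" for c in constraints)
    return f"{prompt}\n\nFollow these rules:\n{rules}"


def add_context(prompt, context_snippets):
    """Contextual: prepend background information the model should use."""
    context = "\n".join(context_snippets)
    return f"Context:\n{context}\n\n{prompt}"


prompt = template_prompt("support ticket", 2, "Customer reports the app crashes on login.")
prompt = add_constraints(prompt, ["Use plain language.", "Do not mention internal ticket IDs."])
prompt = add_context(prompt, ["The login service was updated on Monday."])
print(prompt)
```

Keeping each type in its own helper makes it easy to test which part of a prompt actually improves the output.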

Q. How does prompt tuning work?

Prompt tuning keeps the AI model’s weights frozen and trains a small set of prompt vectors (a “soft prompt”) that is prepended to every input. The learned prompt steers the model toward informative, contextually relevant responses for the target task.

Q. What is the main advantage of prompt engineering?

Prompt engineering provides control over AI-generated content by structuring the input and defining guidelines. This is particularly valuable in applications where maintaining specific standards is essential.

Q. Which AI training method is best for creative tasks?

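Prompt tuning suits creative tasks when you have examples of the style you want, since a learned prompt can steer a frozen model toward that style. For quick, ad hoc experimentation, hand-crafted prompts (prompt engineering) are usually the faster route.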

Q. How can businesses benefit from prompt engineering?

Businesses can enhance customer support and chatbot interactions by employing prompt engineering to ensure consistent and helpful responses.
