


Introduction

In today’s digital age, the protection of sensitive information is paramount. With the rise in data breaches and cyber threats, understanding data tokenization has never been more crucial. This comprehensive playbook will shed light on data tokenization, its importance, and how to employ it effectively. Let’s dive into the world of data security and unveil the strategies for safeguarding valuable information.

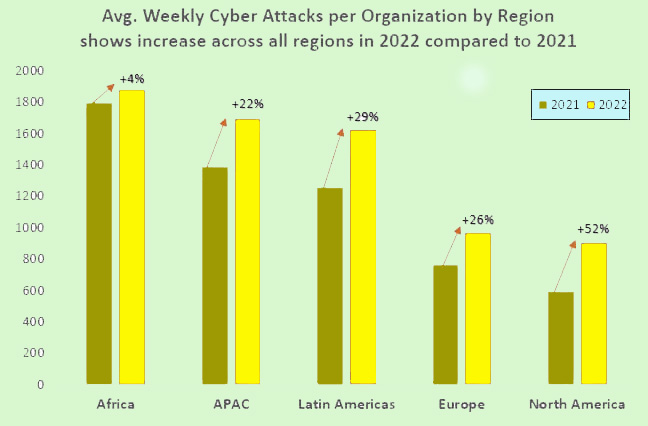

Cyberattacks on the Rise

Cybersecurity is a top-of-mind issue for business leaders worldwide. According to IBM’s Cost of a Data Breach Report 2023, the global average cost of a data breach reached $4.45 million, a 15% increase over three years.

Cyber threats are growing fast, and PwC’s CEO survey found that nearly half of CEOs consider cyber risk their primary concern. The numbers are alarming, with 2021 seeing a 70% increase in significant data breaches compared to the previous year. High-profile incidents involving Facebook, JBS, Kaseya, and the Colonial Pipeline underscore the scale and impact of these breaches.

What is Data Tokenization?

Data tokenization is not just a buzzword; it’s a formidable shield against data breaches. But what exactly is it? Data tokenization replaces sensitive information with non-sensitive substitutes called tokens, typically strings of randomly generated characters. A token has no inherent meaning and no mathematical relationship to the value it stands in for, so it cannot be reverse-engineered; only an authorized party with access to the secured mapping can recover the original data.

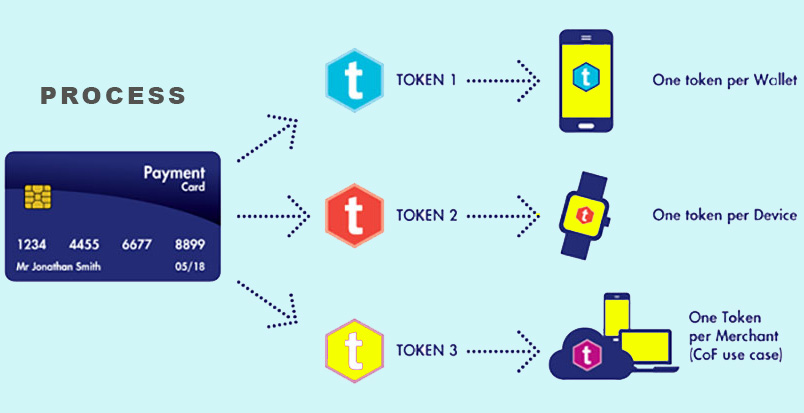

In other words, when you need to store sensitive information, you generate a token for it and store the token instead of the sensitive value itself. The real value is kept in a separate, more secure database, often called a token vault.

For example, with credit card data, you generate a token for the card number and store only that token in your database, while the original card number stays with the bank or payment provider. Whenever you need to make a transaction, you submit the token; after you authenticate, the provider looks up the real card number and completes the transaction.
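The card example can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the `TokenVault` class, the `tok_` prefix, and the boolean `authorized` flag (a stand-in for real authentication) are all hypothetical, and two in-memory dicts play the role of the separate, secured vault database.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory token vault, for illustration only.

    A real vault is a separate, hardened, access-controlled data store;
    here two dicts stand in for it.
    """

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse an existing token so one card number always maps to one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # Random token: no mathematical relationship to the original value.
        token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str, authorized: bool) -> str:
        # Stand-in for real authentication and authorization checks.
        if not authorized:
            raise PermissionError("caller is not allowed to detokenize")
        return self._token_to_value[token]


vault = TokenVault()
card_token = vault.tokenize("4111111111111111")  # the merchant stores only this
print(card_token)  # tok_ followed by 32 random hex characters
print(vault.detokenize(card_token, authorized=True))  # 4111111111111111
```

Because each token comes from `secrets.token_hex`, it carries no information about the card number: stealing the merchant’s database yields only meaningless tokens, and the reverse mapping exists only inside the vault.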

The Need for Data Tokenization

With the ever-increasing frequency and cost of data breaches, the need for data tokenization has never been more pressing. As businesses navigate legacy application modernization and adapt to the realities of remote work, the risk and potential fallout from data breaches continue to rise. To add to this complexity, the regulatory environment surrounding data privacy and protection is becoming increasingly strict. Non-compliance can result in severe penalties, legal battles, and significant damage to a brand’s reputation.

To Tokenize or Not to Tokenize?

The question arises – should your business embrace data tokenization? The answer is a resounding yes. Data tokenization offers a proactive approach to data security, safeguarding your organization against evolving threats. By implementing this technology, you not only mitigate the risk of breaches but also demonstrate a commitment to data privacy and regulatory compliance.

Understanding Data Tokenization

Like data masking, data tokenization is a technique used to protect sensitive data. It substitutes sensitive data with a non-sensitive counterpart, known as a token. This token is typically a randomized string of characters that bears no resemblance to the original data. Here’s why it’s a game-changer:

- Enhanced Data Security: Data tokenization fortifies data security by making it nearly impossible for unauthorized individuals to recover the original information. Even if a breach exposes the tokens, they are useless to attackers as long as the token vault and its keys remain secure.

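- Compliance with Regulations: In an era of stringent data protection laws, data tokenization helps businesses stay compliant. Whether it’s GDPR, HIPAA, or PCI DSS, tokenization aids in meeting regulatory requirements; under PCI DSS, for instance, systems that store only tokens instead of card numbers can often be removed from assessment scope.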

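- Versatility in Data Handling: Tokenization doesn’t just protect data; it also allows for seamless data processing. Because tokens can keep the length and format of the original value, existing databases and applications can store and pass them along unchanged, and most operations never need the real data.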

- Minimized Data Breach Impact: In the unfortunate event of a data breach, the impact is significantly reduced as tokens hold no intrinsic value. This minimizes reputational damage and financial losses.
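To make the point about versatile data handling concrete, here is a minimal sketch of a format-preserving random token for a card number: it keeps the length and the last four digits, so existing columns and receipts showing "**** 1111" keep working. The helper names, the keep-last-four rule, and the choice to force a Luhn-invalid result (so a token can never pass as a real card number) are assumptions for illustration, and the token would still need a vault entry to be mapped back. This is random format-preserving tokenization, not format-preserving encryption such as NIST FF1.

```python
import secrets


def luhn_valid(number: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    # Walk the digits right to left, doubling every second one.
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def format_preserving_token(pan: str, keep_last: int = 4) -> str:
    """Random all-digit token with the same length and trailing digits as `pan`.

    Hypothetical helper: the token is regenerated until it fails the Luhn
    check, so it can never be mistaken for a valid card number.
    """
    if not (pan.isascii() and pan.isdigit()) or not 0 <= keep_last < len(pan):
        raise ValueError("pan must be ASCII digits and longer than keep_last")
    n_random = len(pan) - keep_last
    suffix = pan[n_random:]  # digits kept in the clear (e.g. the last four)
    while True:
        prefix = "".join(secrets.choice("0123456789") for _ in range(n_random))
        token = prefix + suffix
        if not luhn_valid(token):
            return token


card = "4111111111111111"  # a well-known test card number, not a real one
token = format_preserving_token(card)
print(token)  # a 16-digit token ending in 1111
```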

Implementing Data Tokenization

Tokenization is a versatile strategy applicable across various industries. Here’s how you can implement it effectively:

- Identify Sensitive Data: The first step is to identify the data that requires protection. This includes credit card numbers, Social Security numbers, and other personally identifiable information (PII).

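- Choose a Tokenization Method: Select a tokenization method that suits your needs. Format-preserving tokenization retains the data format, making it suitable for databases and applications. Keyed hashing (for example, HMAC with a secret key) is another option when you need one-way tokenization; plain, unkeyed hashes of small value spaces such as card or Social Security numbers can be brute-forced.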

- Secure Key Management: The security of tokenized data relies on robust key management. Ensure that encryption keys and the token vault are stored securely, and that access is limited to authorized personnel.

- Regularly Monitor and Update: Continuous monitoring and periodic updates are essential to maintain data security. Keep up-to-date with the latest tokenization techniques and security practices.
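As one illustration of the identification and keyed-hashing steps above, the sketch below finds US Social Security numbers in free text and replaces each with an HMAC-SHA-256 token, using only Python’s standard library. The regex is deliberately simplified, and the `hmac_token` and `tokenize_ssns` names and the `tok_` prefix are hypothetical. Generating the key at startup is an assumption made to keep the example runnable; a real deployment would load it from a key management service or HSM.

```python
import hashlib
import hmac
import re
import secrets

# Assumption: a real system would load this key from a key management
# service or HSM; generating it here just keeps the sketch runnable.
TOKEN_KEY = secrets.token_bytes(32)

# Simplified pattern for US Social Security numbers such as 123-45-6789.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def hmac_token(value: str, key: bytes = TOKEN_KEY) -> str:
    """Deterministic, one-way token: same value and key give the same token."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest


def tokenize_ssns(text: str, key: bytes = TOKEN_KEY) -> str:
    """Identify SSN-shaped substrings and replace each with its HMAC token."""
    return SSN_PATTERN.sub(lambda m: hmac_token(m.group(0), key), text)


record = "Patient Jane Doe, SSN 123-45-6789, follow-up required"
print(tokenize_ssns(record))  # the SSN is replaced by "tok_" plus 64 hex characters
```

The secret key is what makes this safe: there are at most a billion possible SSNs, so an unkeyed SHA-256 hash could be reversed by hashing every candidate, whereas with HMAC an attacker also needs the key. The tokens are deterministic (the same SSN always yields the same token, so records can still be joined) but one-way, so this method fits only when the original value is never needed again.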

Top 5 Benefits of Data Tokenization

Data tokenization offers a myriad of benefits:

- Minimized Risk: Reduced risk of data breaches and fraud.

- Efficient Data Handling: Streamlined data processing with tokens.

- Regulatory Compliance: Simpler adherence to data protection regulations.

- Enhanced Customer Trust: Increased customer confidence due to improved data security.

- Cost Savings: The implementation of data tokenization is a cost-effective solution in comparison to the potential financial fallout of a data breach.

Data Tokenization Use Cases

Data tokenization has a broad range of applications, from financial services to healthcare. In finance, it secures payment card data, while in healthcare, it protects patient records. The technology’s versatility makes it an asset to any industry looking to bolster data security.

Some common use cases include:

- Payment Processing: Tokenization is frequently used in the payment industry to protect credit card information during transactions.

- Healthcare: Medical records are highly sensitive. Tokenization ensures that patient data remains confidential and secure.

- E-commerce: Online retailers can use tokenization to protect customer information, such as addresses and payment details.

Differences Between Encryption and Data Tokenization

- Purpose and Usage: Encryption is primarily used to protect data in transit or at rest, ensuring confidentiality. Data tokenization, on the other hand, is often used to remove sensitive values from databases and business systems altogether.

- Security Aspects: Both methods enhance security, but in different ways. Encrypted data is mathematically derived from the original, so anyone who obtains the decryption key can recover it. A random token has no mathematical relationship to the original, so its security relies on protecting the token vault and the tokenization system.

- Performance and Speed: Encryption and decryption add computational cost on every access, which can affect system performance. Tokenization lets most systems work with tokens directly, although recovering an original value requires a lookup in the token vault.

Frequently Asked Questions

Q: What is the difference between data encryption and data tokenization?

Data encryption transforms data into a different format that can be reversed with the right decryption key. Data tokenization, on the other hand, replaces data with tokens that have no mathematical relationship to the original data; the original can only be retrieved from the token vault by an authorized system.

Q: Is data tokenization suitable for small businesses?

Yes, data tokenization can be tailored to the needs of small businesses. It offers cost-effective data security and compliance with regulations.

Q: How do I choose the right tokenization method for my business?

The choice of tokenization method depends on the type of data you need to protect and your data processing requirements. Consult with a security expert to make the best selection.

Q: Can tokenized data be reversed?

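A token cannot be reversed mathematically, because it bears no direct relationship to the original data. Authorized systems can, however, retrieve the original value from the token vault (detokenization). Only hash-based, one-way tokenization is truly irreversible.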

Q: What are the key challenges in implementing data tokenization?

The main challenges include ensuring secure key management, selecting the appropriate tokenization method, and keeping up with evolving security practices.

Q: Does tokenization impact system performance?

Tokenization is designed to be efficient and should not significantly impact system performance if implemented correctly.

Conclusion

In a digital landscape where data security is paramount, data tokenization stands as a powerful guardian of sensitive information. By replacing valuable data with tokens, it ensures that even if a breach occurs, the data remains protected. Implementing data tokenization not only reduces risk but also demonstrates a commitment to compliance with data protection regulations. Embrace this advanced technique to fortify your data security and bolster customer trust.