What Is Low-Rank Adaptation (LoRA)? How It Works, with Example Code

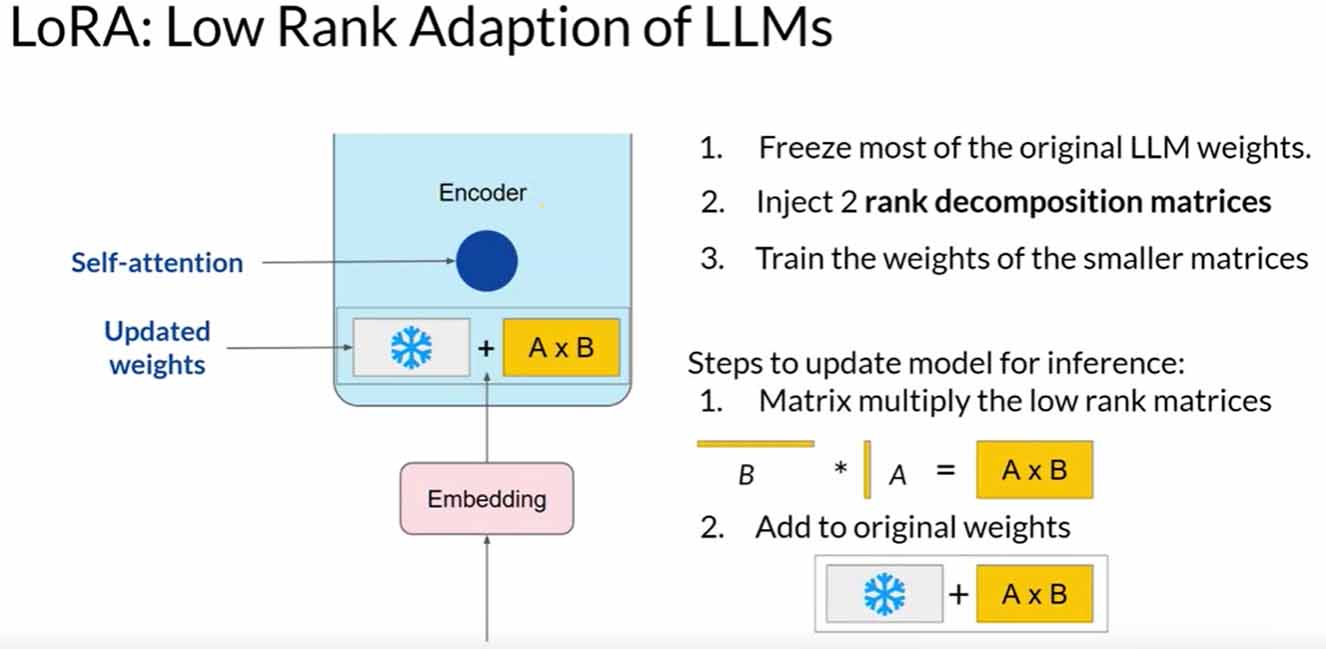

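LoRA reduces the number of trainable parameters needed for fine-tuning. It freezes all of the original model's weights. Alongside each weight matrix being adapted, W₀ of size d × k, it injects a pair of trainable rank-decomposition matrices, B (d × r) and A (r × k), where the rank r is much smaller than min(d, k). Only A and B are updated during fine-tuning. Their product BA acts as the weight update, so the adapted layer computes W₀x + BAx, and the layer trains r(d + k) parameters instead of d·k.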
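To make these mechanics concrete, here is a minimal, dependency-free sketch of a single LoRA-adapted linear layer in plain Python. It follows the formulation from the original LoRA paper: the forward pass is h = W₀x + (α/r)·BAx, A gets a small random Gaussian initialization, and B starts at zero. The names `LoRALinear`, `matmul` and `merged_weight`, the 0.01 standard deviation, and the seed are illustrative assumptions, not any library's API. A real implementation would use a tensor library with autograd, and this sketch omits the training loop entirely.

```python
import random


def matmul(X, Y):
    """Multiply matrices stored as lists of rows: (n x m) @ (m x p) -> (n x p)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


class LoRALinear:
    """Hypothetical layer: frozen weight W0 (d x k) plus a trainable update B @ A.

    B is d x r and A is r x k, so B @ A has the same shape as W0 but only
    r * (d + k) trainable entries instead of d * k.
    """

    def __init__(self, W0, r, alpha=1.0, seed=0):
        rng = random.Random(seed)
        self.W0 = W0  # pretrained weight: frozen, never updated
        self.d, self.k = len(W0), len(W0[0])
        self.r = r
        self.scale = alpha / r  # the low-rank update is scaled by alpha / r
        # A: small random Gaussian init (std 0.01 is an assumption).
        # B: zeros, so B @ A = 0 and the layer starts identical to the pretrained one.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(self.k)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(self.d)]

    def trainable_params(self):
        # Only A (r x k) and B (d x r) would receive gradient updates.
        return self.r * (self.d + self.k)

    def forward(self, x):
        """h = W0 x + scale * B (A x) for an input vector x of length k."""
        col = [[v] for v in x]
        frozen = matmul(self.W0, col)
        # Apply A first, then B, so the full d x k product B @ A is never formed.
        update = matmul(self.B, matmul(self.A, col))
        return [f[0] + self.scale * u[0] for f, u in zip(frozen, update)]

    def merged_weight(self):
        """Fold the adapter into a single d x k matrix, W0 + scale * B @ A."""
        BA = matmul(self.B, self.A)
        return [[w + self.scale * u for w, u in zip(rw, ru)]
                for rw, ru in zip(self.W0, BA)]


# Parameter count for one 4096 x 4096 weight at rank 8 (arithmetic only).
d, k, r = 4096, 4096, 8
print(d * k, r * (d + k))  # 16777216 65536

# Toy layer: a 6 x 4 frozen weight adapted at rank 2.
W0 = [[float(i + j) for j in range(4)] for i in range(6)]
layer = LoRALinear(W0, r=2, alpha=4.0)
x = [1.0, -2.0, 0.5, 3.0]
# With B still zero, the output equals the frozen layer's output W0 x.
print(layer.forward(x) == [row[0] for row in matmul(W0, [[v] for v in x])])  # True

# Pretend training has changed B (values are arbitrary, for illustration).
layer.B = [[0.1 * (i - j) for j in range(2)] for i in range(6)]
merged_out = [row[0] for row in matmul(layer.merged_weight(), [[v] for v in x])]
print(all(abs(a - b) < 1e-9 for a, b in zip(layer.forward(x), merged_out)))  # True
```

Because B starts at zero, fine-tuning begins from exactly the pretrained behavior. After training, `merged_weight()` shows how BA can be folded back into W₀, so the deployed layer is an ordinary d × k matrix and inference costs no extra compute. At rank 8 on a 4096 × 4096 matrix, the adapter trains 65,536 parameters, about 0.39% of the 16,777,216 in the full matrix.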